Here is a short introduction to the Python modules used in this tutorial:

Mayavi2 is a general purpose, cross-platform tool for 3-D scientific data visualization. Its features include:

- Visualization of scalar, vector and tensor data in 2 and 3 dimensions.

- Easy scriptability using Python.

- Easy extendibility via custom sources, modules, and data filters.

- Reading several file formats: VTK (legacy and XML), PLOT3D, etc.

- Saving of visualizations.

- Saving rendered visualization in a variety of image formats.

- Convenient functionality for rapid scientific plotting via mlab.

Install the modules with the pip3.6 tool:

C:\Python364\Scripts>pip3.6.exe install mayavi

Requirement already satisfied: mayavi in c:\python364\lib\site-packages (4.6.2)

...

C:\Python364\Scripts>pip3.6.exe install moviepy

Collecting moviepy

...

Installing collected packages: tqdm, moviepy

Successfully installed moviepy-0.2.3.5 tqdm-4.28.1

First example:

C:\Python364>python.exe

Python 3.6.4 (v3.6.4:d48eceb, Dec 19 2017, 06:54:40) [MSC v.1900 64 bit (AMD64)]

on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> import mayavi.mlab as mlab

>>> f = mlab.gcf()

>>> f.scene._lift()

>>>

In signal processing, a sinc filter is an idealized filter that removes all frequency components above a given cutoff frequency, without affecting lower frequencies, and has linear phase response. The filter's impulse response is a sinc function in the time domain, and its frequency response is a rectangular function.

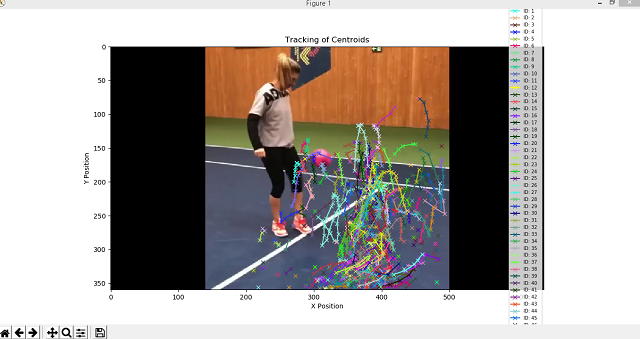

I created this example to show you a sinc function that changes over time.

This is my output (note that it is not the frequency response given by the Fourier transform of the rectangular function).

Let's see the source code of the animation:
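To make the sinc filter definition above more concrete, here is a minimal NumPy sketch. It is not part of the animation script. The function name `sinc_lowpass`, the cutoff of 0.1 cycles per sample and the 201 taps are my own assumptions for illustration. Because the ideal sinc is infinitely long, the sketch truncates it, so the frequency response is only approximately rectangular and shows some ripple near the cutoff.

```python
import numpy as np

def sinc_lowpass(cutoff, num_taps=201):
    """Truncated impulse response of an ideal low-pass filter.

    cutoff is in cycles per sample (0 < cutoff < 0.5).
    Hypothetical helper, written only for this illustration.
    """
    # sample indices centered on zero, so the response is symmetric
    n = np.arange(num_taps) - (num_taps - 1) / 2
    # np.sinc(x) is the normalized sinc: sin(pi*x) / (pi*x)
    return 2 * cutoff * np.sinc(2 * cutoff * n)

h = sinc_lowpass(0.1)

# magnitude of the frequency response on a dense grid
H = np.abs(np.fft.rfft(h, 4096))
freqs = np.fft.rfftfreq(4096)

# look away from the cutoff, where the truncation ripple is small
passband = H[freqs < 0.08]
stopband = H[freqs > 0.12]
print("passband min/max:", passband.min(), passband.max())
print("stopband max:", stopband.max())
```

The passband values stay close to 1 and the stopband values stay close to 0, which is the rectangular shape described above, up to the ripple caused by truncation.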

# import python modules

import numpy as np

import mayavi.mlab as mlab

import moviepy.editor as mpy

# duration of the animation in seconds

duration = 2

# create the grid of points for x and y

x, y = np.mgrid[-30:30:100j, -30:30:100j]

# create the figure with a fixed size and a white background

fig = mlab.figure(size=(640,480), bgcolor=(1,1,1))

# radial distance of each grid point from the origin

r = np.sqrt(x**2 + y**2)

# this works around issue https://github.com/enthought/mayavi/issues/702

fig = mlab.gcf()

fig.scene._lift()

# create all frames

def make_frame(t):

    # clear the figure

    mlab.clf()

    # surface height z changes with time t (the step is 0.05 s at 20 fps)

    z = np.sin(r*t)/r

    # create the surface

    mlab.surf(z, warp_scale='auto')

    return mlab.screenshot(antialiased=True)

# create the animation clip

animation = mpy.VideoClip(make_frame, duration=duration)

# write the animation to a GIF file

animation.write_gif("sinc.gif", fps=20)
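The numeric part of the script can be checked without Mayavi or MoviePy. The sketch below rebuilds the same grid and evaluates sin(r*t)/r for every frame time. The helper `surface_height` is a hypothetical name of my own, and I assume the frames are sampled every 1/fps seconds starting at t = 0. The helper also fills in the limit value t at r = 0, which the script above does not need, because a grid with 100 points per axis never lands exactly on the origin.

```python
import numpy as np

# same grid as in the script: 100 points per axis, end points included
x, y = np.mgrid[-30:30:100j, -30:30:100j]
r = np.sqrt(x**2 + y**2)

def surface_height(r, t):
    """Return sin(r*t)/r, using the limit value t where r is 0.

    Hypothetical helper, not part of the original script.
    """
    r = np.asarray(r, dtype=float)
    z = np.full_like(r, float(t))      # limit of sin(r*t)/r as r -> 0
    nonzero = r != 0
    z[nonzero] = np.sin(r[nonzero] * t) / r[nonzero]
    return z

fps, duration = 20, 2
# assumed sampling times: 0, 0.05, 0.10, ... (one per frame)
times = np.arange(int(duration * fps)) / fps
frames = [surface_height(r, t) for t in times]

print("grid shape:", x.shape)
print("smallest r on the grid:", r.min())
print("number of frames:", len(frames))
```

Note that the first frame (t = 0) is completely flat, since sin(0)/r is 0 everywhere.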