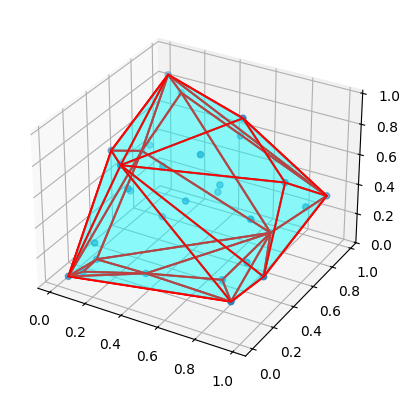

This project is Python code that uses a language model (such as LLaMA) to generate images of basic 2D or 3D shapes. The script randomly selects shapes, colors, and areas to create varied visuals. It runs in a loop: it asks the model for drawing code, validates the result, and feeds any problems back to the model so the next attempt can improve.
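The generate, validate, and feed back loop described above can be sketched as follows. This is a minimal illustration and not the project's actual code: the names `SHAPES`, `COLORS`, `build_prompt`, `validate`, and `generate_with_feedback` are hypothetical, the model call is passed in as a plain function, and validation here only checks that the returned code compiles.

```python
import random
from typing import Callable, Optional, Tuple

# Illustrative choices only; the real project may use different lists.
SHAPES = ["circle", "square", "triangle"]
COLORS = ["red", "green", "blue"]


def build_prompt(shape: str, color: str, area: int, feedback: Optional[str]) -> str:
    """Describe the requested image and, on a retry, include the last error."""
    prompt = f"Write Python code that draws a {color} {shape} with area {area}."
    if feedback:
        prompt += f" The previous attempt failed with: {feedback}"
    return prompt


def validate(code: str) -> Optional[str]:
    """Return None if the code compiles, otherwise a short error message."""
    try:
        compile(code, "<generated>", "exec")
    except SyntaxError as exc:
        return f"SyntaxError: {exc.msg}"
    return None


def generate_with_feedback(
    ask_model: Callable[[str], str],
    max_attempts: int = 3,
    seed: Optional[int] = None,
) -> Tuple[Optional[str], int]:
    """Ask the model for code, retrying with feedback until it validates."""
    rng = random.Random(seed)
    # Pick the random shape, color, and area once, then retry on that request.
    shape = rng.choice(SHAPES)
    color = rng.choice(COLORS)
    area = rng.randint(10, 100)

    feedback = None
    for attempt in range(1, max_attempts + 1):
        code = ask_model(build_prompt(shape, color, area, feedback))
        feedback = validate(code)
        if feedback is None:
            return code, attempt
    return None, max_attempts
```

With the `ollama` Python package, `ask_model` could be a small wrapper around `ollama.chat(model="llama3.1", messages=[{"role": "user", "content": prompt}])` that returns the message content. Passing the model call in as a function keeps the loop testable without a running Ollama server.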

The source code is available in the GitHub project Ollama-Adaptive-Image-Code-Gen.

You can download it and use the following commands to run and test image generation with Ollama.

NOTE: The compose up process might take up to 20 to 25 minutes the first time, because it downloads all the respective model files.

Let's start:

git clone https://github.com/jaypatel15406/Ollama-Adaptive-Image-Code-Gen.git

Cloning into 'Ollama-Adaptive-Image-Code-Gen'...

Resolving deltas: 100% (30/30), done.

cd Ollama-Adaptive-Image-Code-Gen

Ollama-Adaptive-Image-Code-Gen>pip3 install -r requirements.txt

Collecting ollama (from -r requirements.txt (line 1))

...

Installing collected packages: propcache, multidict, frozenlist, aiohappyeyeballs, yarl, aiosignal, ollama, aiohttp

Successfully installed aiohappyeyeballs-2.4.8 aiohttp-3.11.13 aiosignal-1.3.2 frozenlist-1.5.0 multidict-6.1.0 ollama-0.4.7 propcache-0.3.0 yarl-1.18.3

Ollama-Adaptive-Image-Code-Gen>python main.py

utility : pull_model_instance : Instansiating 'llama3.1' ...

utility : pull_model_instance : 'llama3.1' Model Fetching Status : pulling manifest

utility : pull_model_instance : 'llama3.1' Model Fetching Status : pulling 667b0c1932bc

It seems to work, but I need more memory:

Ollama-Adaptive-Image-Code-Gen>python main.py

utility : pull_model_instance : Instansiating 'llama3.1' ...

utility : pull_model_instance : 'llama3.1' Model Fetching Status : pulling manifest

utility : pull_model_instance : 'llama3.1' Model Fetching Status : pulling 667b0c1932bc

utility : pull_model_instance : 'llama3.1' Model Fetching Status : pulling 948af2743fc7

utility : pull_model_instance : 'llama3.1' Model Fetching Status : pulling 0ba8f0e314b4

utility : pull_model_instance : 'llama3.1' Model Fetching Status : pulling 56bb8bd477a5

utility : pull_model_instance : 'llama3.1' Model Fetching Status : pulling 455f34728c9b

utility : pull_model_instance : 'llama3.1' Model Fetching Status : verifying sha256 digest

utility : pull_model_instance : 'llama3.1' Model Fetching Status : writing manifest

utility : pull_model_instance : 'llama3.1' Model Fetching Status : success

=========================================================================================

utility : get_prompt_response : Prompt : Choose the dimension of the shape: '2D' or '3D'. NOTE: Return only the chosen dimension.

ERROR:root: utility : get_prompt_response : Error : model requires more system memory (5.5 GiB) than is available (5.1 GiB) (status code: 500) ...
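The error above states both how much memory the model needs and how much is free. As a rough sketch, a script could detect this case and report the shortfall instead of only logging the raw error. The helper names `parse_memory_error` and `describe_shortfall` are hypothetical, and the pattern is based only on the single log line shown here, so other wordings or units would not match.

```python
import re
from typing import Optional, Tuple

# Pattern taken from the one error line in the log above (assumed format).
_MEMORY_ERROR = re.compile(
    r"requires more system memory \((?P<need>[\d.]+) GiB\) "
    r"than is available \((?P<have>[\d.]+) GiB\)"
)


def parse_memory_error(message: str) -> Optional[Tuple[float, float]]:
    """Return (required_gib, available_gib), or None if the text does not match."""
    match = _MEMORY_ERROR.search(message)
    if match is None:
        return None
    return float(match.group("need")), float(match.group("have"))


def describe_shortfall(message: str) -> Optional[str]:
    """Turn the raw error text into a short, readable hint."""
    parsed = parse_memory_error(message)
    if parsed is None:
        return None
    need, have = parsed
    return (
        f"Model needs {need:.1f} GiB but only {have:.1f} GiB is free "
        f"(short by {need - have:.1f} GiB)."
    )


if __name__ == "__main__":
    error_text = (
        "model requires more system memory (5.5 GiB) "
        "than is available (5.1 GiB) (status code: 500)"
    )
    print(describe_shortfall(error_text))
```

In this run the gap is small, so closing other applications to free memory may be enough. Switching to a smaller model is another common option, assuming the project lets you change the model name.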