I started this tutorial after reading Google's SDK page for App Engine.

Google lists the following frameworks that can be used with the Python programming language:

- Flask

- Django

- Pyramid

- Bottle

- web.py

- Tornado

This tutorial covers web.py, a simple web framework for Python that comes with the following slogan:

Think about the ideal way to write a web app. Write the code to make it happen.

Install the development release with pip, then start the Python interpreter and import the module to check that the installation works:

C:\Python364\Scripts>pip install web.py==0.40-dev1

Collecting web.py==0.40-dev1

Downloading https://files.pythonhosted.org/packages/db/a5/8dfacc190908f987663269a92efa682175c377e3f7eab84ed0a89c963b47/web.py-0.40.dev1.tar.gz (117kB)

100% |████████████████████████████████| 122kB 936kB/s

Building wheels for collected packages: web.py

Building wheel for web.py (setup.py) ... done

Stored in directory: C:\Users\catafest\AppData\Local\pip\Cache\wheels\1b\15\12\4fd91f5ed7e3c8aae085050cce83f72b7ca4f463bf3e67d2b7

Successfully built web.py

Installing collected packages: web.py

Successfully installed web.py-0.40.dev1

C:\Python364>python.exe

Python 3.6.4 (v3.6.4:d48eceb, Dec 19 2017, 06:54:40) [MSC v.1900 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> import web

>>>

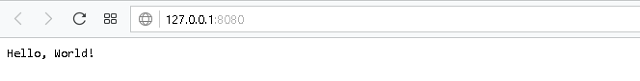

>>> urls = (

...     '/(.*)', 'hello'

... )

>>> app = web.application(urls, globals())

>>>

>>> class hello:

...     def GET(self, name):

...         if not name:

...             name = 'World'

...         return 'Hello, ' + name + '!'

...

>>> if __name__ == "__main__":

...     app.run()

...

http://0.0.0.0:8080/

127.0.0.1:50542 - - [27/Jan/2019 07:30:28] "HTTP/1.1 GET /" - 200 OK

127.0.0.1:50542 - - [27/Jan/2019 07:30:28] "HTTP/1.1 GET /favicon.ico" - 200 OK
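
The log above shows that even the request for /favicon.ico gets 200 OK. That happens because the pattern '/(.*)' matches every path, so the hello class answers all of them. The sketch below tries to illustrate this kind of URL mapping using only the standard library. It is a simplified approximation and not the real web.py code: the dispatch function is a hypothetical helper written for this tutorial, and I assume that a pattern must match the whole path.

```python
import re

# Same shape as the tuple used in the web.py session:
# a regular expression followed by the name of the handler class.
urls = (
    '/(.*)', 'hello',
)


class hello:
    def GET(self, name):
        if not name:
            name = 'World'
        return 'Hello, ' + name + '!'


def dispatch(path, mapping, namespace):
    """Hypothetical helper (not part of web.py): call GET on the first
    handler whose pattern matches the whole path."""
    # Walk the flat tuple two items at a time: pattern, then class name.
    for pattern, handler_name in zip(mapping[::2], mapping[1::2]):
        match = re.fullmatch(pattern, path)
        if match:
            handler = namespace[handler_name]()
            # The captured groups become the arguments of GET.
            return handler.GET(*match.groups())
    return None  # a real framework would answer with 404 here


print(dispatch('/', urls, globals()))             # Hello, World!
print(dispatch('/catafest', urls, globals()))     # Hello, catafest!
print(dispatch('/favicon.ico', urls, globals()))  # Hello, favicon.ico!
```

The last call shows why the favicon request succeeds: the captured text favicon.ico is passed to GET as the name. A more specific pattern would be needed if the icon should be handled differently.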