Let's continue our story with the child and the gift.

The child saw the gift and his first thought was the desire to know.

The basic building block of a neural network is the perceptron.

He saw that it was not too big, and his eyes lit up.

To compute its output, a perceptron multiplies each input by its respective weight, sums the results, and compares the sum with a threshold value.

Each perceptron also has a bias, which can be thought of as how flexible the perceptron is.
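Here is a minimal sketch of a single perceptron in plain Python. The function name `perceptron` and the weights, bias, and threshold are values I picked for illustration (they make the perceptron behave like a logical AND); they are not taken from a trained network.

```python
def perceptron(inputs, weights, bias, threshold=0.0):
    """Return 1 if the weighted sum plus the bias exceeds the threshold, else 0."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if weighted_sum > threshold else 0


# Hypothetical values chosen so that only the input (1, 1) fires.
weights = [0.6, 0.6]
bias = -1.0

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", perceptron([a, b], weights, bias))
```

Changing the bias shifts the point at which the perceptron starts to fire, which is one way to read the "flexibility" mentioned above.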

Through this process, the perceptron evolved into what is now called an artificial neuron.

The next step is the artificial network, which consists of artificial neurons and the edges between them.

He touched its corners and put his hand on its surface.

The activation function is mostly used to apply a non-linear transformation, which allows us to fit non-linear hypotheses or to estimate complex functions.
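A small sketch of why the non-linearity matters, in plain Python. The helper `linear` and its `w` and `b` values are assumptions made up for this illustration. Stacking two linear steps still gives a straight line (equal steps in the input give equal steps in the output), while passing the result through a sigmoid does not.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def linear(x, w=2.0, b=1.0):
    """A hypothetical linear step: weight times input plus bias."""
    return w * x + b


xs = (0.0, 1.0, 2.0)

# Two linear steps in a row are still linear: the output grows by a constant amount.
stacked = [linear(linear(x)) for x in xs]
steps_linear = [stacked[1] - stacked[0], stacked[2] - stacked[1]]

# With a sigmoid on top, equal input steps no longer give equal output steps.
squashed = [sigmoid(linear(x)) for x in xs]
steps_sigmoid = [squashed[1] - squashed[0], squashed[2] - squashed[1]]

print("linear steps :", steps_linear)
print("sigmoid steps:", steps_sigmoid)
```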

He began to understand that it had a special and complex form.

From start to end, this artificial network is built from:

- Input Layer: X, the input matrix;

- Hidden Layers: the matrix dot product of the input and the weights assigned to the edges between the input and hidden layer, plus the biases of the hidden layer neurons. The resulting error is then used to update all weights and biases at the output and hidden layers;

- Output Layer: y, the output matrix.
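To make the shapes in this list concrete, here is a rough sketch in plain Python of data flowing from the input layer through one hidden layer to the output layer. The helpers `matmul`, `add_bias`, and `sigmoid_all` and all weight and bias values are made up for illustration; only the 3x3 input matrix matches the one used in the PyTorch example in this post.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add_bias(m, bias):
    """Add one bias value per column to every row of the matrix."""
    return [[v + b for v, b in zip(row, bias)] for row in m]


def sigmoid_all(m):
    """Apply the sigmoid to every element of the matrix."""
    return [[1.0 / (1.0 + math.exp(-v)) for v in row] for row in m]


X = [[1, 0, 1], [0, 1, 1], [0, 1, 0]]           # input layer: 3 samples x 3 features
wh = [[0.1, -0.2, 0.3], [0.4, 0.5, -0.6], [-0.7, 0.8, 0.9]]  # hypothetical input-to-hidden weights
bh = [0.0, 0.1, -0.1]                           # hypothetical hidden biases
wout = [[0.2], [-0.3], [0.4]]                   # hypothetical hidden-to-output weights
bout = [0.05]                                   # hypothetical output bias

hidden = sigmoid_all(add_bias(matmul(X, wh), bh))            # 3 samples x 3 hidden neurons
output = sigmoid_all(add_bias(matmul(hidden, wout), bout))   # 3 samples x 1 output neuron

print("hidden shape:", len(hidden), "x", len(hidden[0]))
print("output shape:", len(output), "x", len(output[0]))
```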

Without too much thought, he began to unwrap the gift in the order in which he had touched it.

This process of updating the weights and biases is known as back propagation.

The process of computing the output is known as forward propagation.

Several moves were enough to complete the opening of the gift.

He looked and understood that the gift was now smaller, but the gift was thankful to him.

One round of forward and back propagation is known as one training iteration, or epoch.

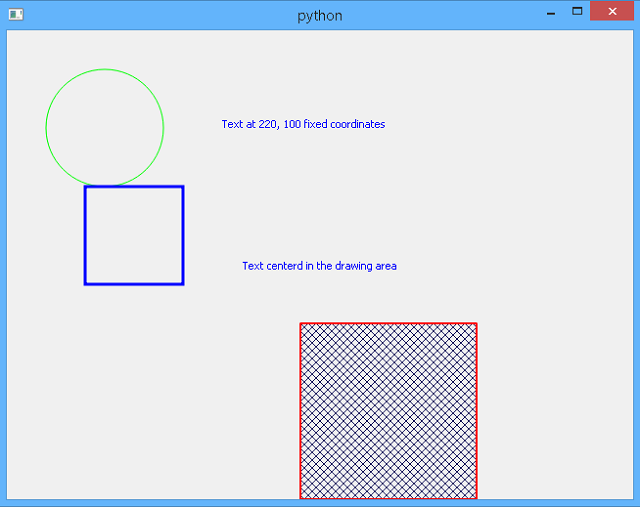

I created the next example from an old example I saw on the internet. It is the simplest way to show you the steps from this last part of the story:

# use a neural network in PyTorch
import torch

# the input array
X = torch.Tensor([[1,0,1],[0,1,1],[0,1,0]])

# the expected output
y = torch.Tensor([[1],[1],[0]])

# the sigmoid function
def sigmoid(x):
    return 1/(1 + torch.exp(-x))

# the derivative of the sigmoid function;
# x must already be a sigmoid output, not the raw input
def derivatives_sigmoid(x):
    return x * (1 - x)

# variable initialization
epoch = 1000 # number of training iterations (epochs)
lr = 0.1 # learning rate value
inputlayer_neurons = X.shape[1] # number of features in the data set
hiddenlayer_neurons = 3 # number of hidden layer neurons
output_neurons = 1 # number of neurons in the output layer

# weight and bias initialization with random values
wh = torch.randn(inputlayer_neurons, hiddenlayer_neurons).type(torch.FloatTensor)
print("weigt = ", wh)
bh = torch.randn(1, hiddenlayer_neurons).type(torch.FloatTensor)
print("bias = ", bh)
wout = torch.randn(hiddenlayer_neurons, output_neurons)
print("wout = ", wout)
bout = torch.randn(1, output_neurons)
print("bout = ", bout)

for i in range(epoch):
    # forward propagation
    hidden_layer_input1 = torch.mm(X, wh)
    hidden_layer_input = hidden_layer_input1 + bh
    hidden_layer_activations = sigmoid(hidden_layer_input)
    output_layer_input1 = torch.mm(hidden_layer_activations, wout)
    output_layer_input = output_layer_input1 + bout
    # use the biased input here, otherwise bout never affects the output
    output = sigmoid(output_layer_input)

    # backpropagation
    E = y - output
    slope_output_layer = derivatives_sigmoid(output)
    slope_hidden_layer = derivatives_sigmoid(hidden_layer_activations)
    d_output = E * slope_output_layer
    Error_at_hidden_layer = torch.mm(d_output, wout.t())
    d_hiddenlayer = Error_at_hidden_layer * slope_hidden_layer

    # update the weights and biases of both layers
    wout += torch.mm(hidden_layer_activations.t(), d_output) * lr
    bout += d_output.sum() * lr
    wh += torch.mm(X.t(), d_hiddenlayer) * lr
    # the hidden bias follows the hidden layer delta, one value per hidden neuron
    bh += d_hiddenlayer.sum(dim=0, keepdim=True) * lr

print('actual :\n', y, '\n')
print('predicted :\n', output)
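One detail in the example that is easy to miss: `derivatives_sigmoid(x)` returns `x * (1 - x)`, which is the slope of the sigmoid only when `x` is already a sigmoid output. That is why the example passes `output` and `hidden_layer_activations` to it. The following sketch checks this numerically in plain Python (no PyTorch needed); the helper `numerical_slope` and the test points are my own choices for the check.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def derivatives_sigmoid(s):
    """Slope of the sigmoid, given s = sigmoid(z) (the activated value)."""
    return s * (1.0 - s)


def numerical_slope(z, eps=1e-6):
    """Central finite-difference estimate of the sigmoid's slope at z."""
    return (sigmoid(z + eps) - sigmoid(z - eps)) / (2 * eps)


for z in (-2.0, 0.0, 1.5):
    analytic = derivatives_sigmoid(sigmoid(z))
    numeric = numerical_slope(z)
    print(z, analytic, numeric)
```

The two columns should agree to several decimal places. Passing the raw `z` instead of `sigmoid(z)` would give a different, wrong slope.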

The results are for epoch values of 100 and 1000 and show how close the predicted results are to the actual output (1, 1, 0). Note also that the weight and bias initialization of the artificial network is created randomly by torch.randn.

If I added this to my story, it would sound like this:

The child's thoughts began to race, wanting to finish faster and find the gift.

C:\Python364>python.exe pytorch_test_002.py
weigt = tensor([[-0.9364, 0.4214, 0.2473],
        [-1.0382, 2.0838, -1.2670],
        [ 1.2821, -0.7776, -1.8969]])
bias = tensor([[-0.3604, -0.8943, 0.3786]])
wout = tensor([[-0.5408],
        [ 1.3174],
        [-0.7556]])
bout = tensor([[-0.4228]])
actual :
tensor([[1.],
        [1.],
        [0.]])
predicted :
tensor([[0.5903],
        [0.6910],
        [0.6168]])

C:\Python364>python.exe pytorch_test_002.py
weigt = tensor([[ 1.2993, 1.5142, -1.6325],
        [ 0.0621, -0.5370, 0.1480],
        [ 1.5673, -0.2273, -0.3698]])
bias = tensor([[-2.0730, -1.2494, 0.2484]])
wout = tensor([[ 0.6642],
        [ 1.6692],
        [-0.4087]])
bout = tensor([[0.3340]])
actual :
tensor([[1.],
        [1.],
        [0.]])
predicted :
tensor([[0.9417],
        [0.8510],
        [0.2364]])